[Edits, 7/28/2022: There were a couple of technical clarifications required]

Let me start off by saying “I HATE FTP!”

It was developed in the early 1970s, before the Internet was really the Internet, and before the existence of firewalls or any other type of network security. Over the last nearly 50 years, it has picked up so many band-aids that the entire protocol is now basically one big kludge.

Despite its shortcomings, and despite the availability of newer and far superior file transfer tools, banks, governments, and anyone else with a mainframe continue to use it.

So, unfortunately, people like you and me get stuck supporting it.

If you’re having an FTP problem, or are just morbidly curious to learn about an antiquated protocol, read on…

Overview of FTP

In a normal FTP conversation, the client connects to the server using TCP/IP port 21, which is the well-known port for FTP. Once the connection is established, the server prompts the client to authenticate, which can be done in a number of ways, but the most standard is simply for the client to supply a username and password.

Once authenticated, the client uses this channel, called the control channel, to tell the server what it wants to do. Typically, the client would issue a series of commands to set up the session properly. For example, issuing the BIN command tells the server to use BINary (8-bit) mode because most FTP servers default to ASCII (7-bit) mode.

Tip: If you forget to switch to binary mode, the server will strip off the 8th bit of every byte in your file before sending it to you. Most GUI FTP clients switch to binary mode for you, but if you use command-line FTP and you keep downloading corrupt files, this might be the reason.

Why? Because FTP was developed BEFORE the IBM “PC-8” character set, and only the first 128 characters (7 bits) were defined by ASCII as a standard across systems. Back in the early ’70s, most of what you would transfer would be in the form of text, anyway, because most systems didn’t have graphical displays, so there were very few digital pictures, and music and movies didn’t yet exist in a digital format. Even downloading a program – something simple that we do today – usually involved downloading the source code, in text format, and compiling it locally. So back then, nearly 50 years ago, it made perfect sense to default to ASCII transfer mode, which also had the advantage of being compatible with the 7-bit serial and MUX networks that were in common use by terminals and teletype equipment that you might be using to access the host. This allowed you to view or print a file as it was downloading – probably across a very slow TCP/IP network.

Also, ASCII mode was intended to tell the FTP host to translate the file from any other local encoding system into ASCII as it was downloaded. This was convenient for transferring files to and from IBM mainframe hosts, which used EBCDIC instead of ASCII encoding.

Tip: If you are downloading text files from an IBM mainframe, use ASCII mode, or use BIN mode and then run the file through an EBCDIC to ASCII converter. To check if the file has been converted properly, open the file in a hex editor.

- 0 through 9 in ASCII are 0x30 through 0x39. In EBCDIC, they are 0xF0 through 0xF9 (0x here means “hex”)

- A through Z (uppercase) in ASCII are 0x41 through 0x5A. In EBCDIC, they are non-contiguous. A through I are 0xC1 through 0xC9, J through R are 0xD1 through 0xD9, and S through Z are 0xE2 through 0xE9

- a through z (lowercase) in ASCII are 0x61 through 0x7A. Because they are exactly 0x20 (32 decimal) apart, a cool trick in ASCII is that you can convert to lower by bitwise OR’ing 32. Likewise, if you bitwise AND 223, which is 255-32, you convert to upper. In EBCDIC, like the uppers, the lowers are non-contiguous. a through i are 0x81 through 0x89, j through r are 0x91 through 0x99, and s through z are 0xA2 through 0xA9.
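The ASCII case trick above can be sketched in a few lines of Python. This is only an illustration: the function names are made up for this example, and the trick is only meaningful for the letters A-Z and a-z.

```python
# ASCII upper- and lowercase letters differ only in one bit (value 0x20 = 32).

def to_lower(ch: str) -> str:
    """Set the 0x20 bit. Only meaningful for the ASCII letters A-Z."""
    return chr(ord(ch) | 32)


def to_upper(ch: str) -> str:
    """Clear the 0x20 bit (AND with 223 = 255 - 32). Only meaningful for a-z."""
    return chr(ord(ch) & 223)


print(to_lower("A"), to_upper("a"))  # a A
print(to_lower("@"))                 # ` (non-letters get mangled, not "lowercased")
```

Because the EBCDIC letter ranges are non-contiguous, the same one-liner is not a safe way to change case in an EBCDIC file.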

Because ASCII mode strips off the 8th bit, you might get a file that was originally EBCDIC, but with the 8th bit stripped off. A, which is 0xC1 in EBCDIC, becomes 0x41 – at first this seems like good news, but this only works for A-I. J becomes 0x51 (“Q”), etc… The numbers 0 through 9 become 0x70 through 0x79 (lowercase “p” through “y”).
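If you want to see what a "stripped" EBCDIC file looks like, here is a small Python sketch. It assumes the mainframe uses the common cp037 EBCDIC code page (yours may use a different one), and `strip_high_bit` is just a name made up for this example.

```python
def strip_high_bit(data: bytes) -> bytes:
    """Clear the 8th (most significant) bit of every byte."""
    return bytes(b & 0x7F for b in data)


# Encode a few sample characters as EBCDIC (cp037 is an assumption).
ebcdic = "AIJ09".encode("cp037")
print(ebcdic.hex())                             # c1c9d1f0f9

# What you see if the 8th bit is stripped and the result is read as ASCII.
print(strip_high_bit(ebcdic).decode("ascii"))   # AIQpy

# What you get from a proper EBCDIC to ASCII conversion.
print(ebcdic.decode("cp037"))                   # AIJ09
```

The same `decode("cp037")` call is a quick way to convert a file that you downloaded in BIN mode, as long as you know which EBCDIC code page the host really uses.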

Tip: If you view the file in a text editor and you see letters where numbers are expected, or you see letters but they are the wrong letters, you might be getting a “stripped” 7-bit EBCDIC file instead of ASCII. Try BIN mode.

Once the session is configured by the client through the control channel, the next step is to establish a connection for the data channel, which is where things get weird.

“Normal” (Active) Mode

In “Normal” (Active) mode, the server establishes the data connection back to the client.

So the control channel goes from the client to the server, and the data connection goes from the server to the client.

I have no doubt that in early FTP implementations, the server simply sent the connection to the client on TCP/IP port 20, which is the default port used for this purpose.

This probably worked great when there were like 10 FTP servers in the entire world, but if a client wants to connect to more than one FTP server at a time, that simply won’t work. Each server connection back to the client would need to use port 20, which means that the client would also have to be running an FTP server in order to maintain multiple connections, even if they just wanted to download one file from one server, and there are also all sorts of permission issues that simply didn’t exist back then, etc.

Instead, somewhere along the way, one of the many, many band-aids added to FTP was the PORT command, which tells the server where to send the data connection.

So before the client can get a directory listing or even view a file, the client issues the PORT command, which contains the client’s IP address as well as the TCP/IP port number that the client expects the server to use. On most systems, ports 1 through 1023 are reserved, and sometimes a user needs elevated privileges just to run a process that listens on a reserved port. Instead, most programs that run in user space make use of the “ephemeral” port range, which is a designated range of port numbers in the high port range (1024 to 65535) that are specifically allocated for temporary or “ephemeral” use. For example, your computer might have allocated ports 45000 through 59000 for ephemeral use. This allows the FTP client program to grab one (usually allocated by the system) and then tell the server to connect using whatever port it happened to grab.
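To make "grab one (usually allocated by the system)" a little more concrete, here is a rough Python sketch of how a client program can ask the operating system for a free port to listen on. The function name and the loopback address are assumptions for illustration; a real FTP client would bind to the address the server can actually reach.

```python
import socket


def open_data_listener(bind_ip: str = "127.0.0.1") -> socket.socket:
    """Listen on a port chosen by the operating system."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((bind_ip, 0))  # port 0 means "pick any free port for me"
    sock.listen(1)
    return sock


listener = open_data_listener()
ip, port = listener.getsockname()
print(f"listening on {ip}:{port}")  # the port number changes from run to run
listener.close()
```

Whatever port comes back from `getsockname()` is the one the client would then advertise to the server.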

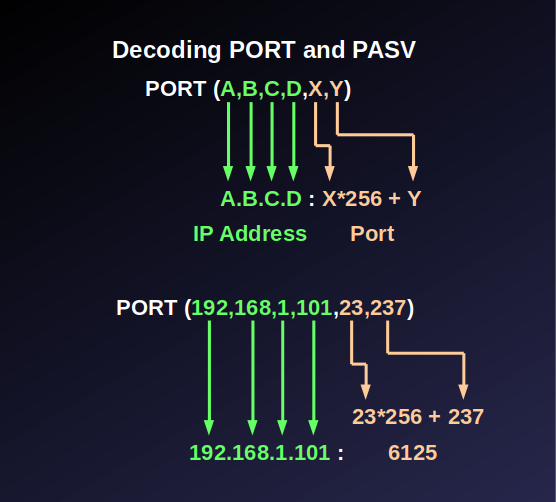

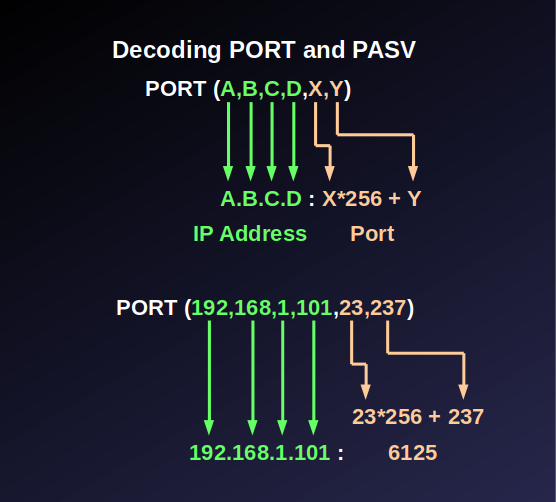

So the FTP client tells the server, “PORT A,B,C,D,X,Y”, where A.B.C.D is the IP address, and X*256+Y is the TCP/IP port where the client expects to receive the connection.
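Going the other direction, from an IP address and port number to the six comma-separated numbers, is just a division by 256. A minimal sketch (the function name is made up, and the address and port are sample values):

```python
def port_argument(ip: str, port: int) -> str:
    """Build the A,B,C,D,X,Y argument of a PORT command."""
    octets = ip.split(".")
    if len(octets) != 4 or not 0 < port < 65536:
        raise ValueError("need a dotted IPv4 address and a port from 1 to 65535")
    high, low = divmod(port, 256)  # port == high * 256 + low
    return ",".join(octets + [str(high), str(low)])


print("PORT " + port_argument("192.168.1.101", 6125))  # PORT 192,168,1,101,23,237
```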

We’ll discuss firewalls and some other nuances later, but for now, once the server makes the data connection, you should be able to run a command such as netstat and see the two connections, which should look like this:

| Channel | Client | Server |
| --- | --- | --- |
| Control | Source: Ephemeral | Destination: TCP/21 |
| Data | Destination: PORT | Source: Ephemeral |

One of the things I absolutely despise about FTP is that there are so many standards, which basically means there are no standards. For example, after the client issues the PORT command, the server might wait around until the client actually downloads something before it establishes the connection. Also, the client might not issue the PORT command right away – it might wait around until it needs something. There are no rules that say “the client MUST issue the PORT command right away, and the server MUST respond immediately by establishing the data connection”.

Since the newest client (with all of the latest band-aids) must support the oldest server and vice-versa, there is a LOT of variability in “what happens next”, and often these decisions end up being quite arbitrary – it’s basically whatever the developer felt like doing when she wrote the code.

So, whenever the client decides to issue the PORT command, and whenever the server decides to connect to the client, the TCP/IP connection table will look like the one above.

Tip: One way to guarantee that the server tries to connect to the client is to issue the LIST command, which returns a directory listing.

I have no doubt that long ago, the directory listing was probably returned on the control channel, but this creates a problem. For long directory listings, you end up tying up the control channel, which means you have no way to tell the server to “stop” or even sever the connection. So again, one more band-aid I’m sure, was to return the directory listing as a file itself, which keeps the control channel clear.

Passive Mode

Yet another band-aid, “passive” (PASV) mode tells the server to “remain passive”, and the client originates the data connection.

When FTP was first designed, there was no such thing as network security. Networks were supposed to be “free and open”, right? This was before hacking and corporate espionage, and a network was a thing at a university that allowed you to connect to other computers at the university, or to other universities.

The first network firewalls were simple devices that acted as gatekeepers at the edge of a network, and used a simple set of rules to determine who was allowed to connect where, and when.

If your network has a server that you want other people on other networks to be able to access, then the firewall needs to be configured to allow any source IP address to connect to the server’s IP address on the TCP/IP port number that corresponds to the server process. So, for an FTP server, your firewall might have a couple of rules that look like this:

ALLOW ANY:ANY 1.2.3.4:21

ALLOW 1.2.3.4:20 ANY:ANY

The first rule allows any source IP address on any network using any ephemeral port to connect to the server at IP address 1.2.3.4 on port 21, which is the well-known port for FTP.

The second rule allows the server at 1.2.3.4 to create the data connection originating on TCP/20 to any client IP address on any port.

This works great on the server side, but remember that for FTP to work correctly, the server must be able to open a connection to the client, and the client gets to specify the port number using the PORT command. So if the client is behind a firewall, in order for it to connect to an FTP server, the client’s firewall must have an entry like this:

ALLOW 5.6.7.8:ANY ANY:21

ALLOW ANY:20 5.6.7.8:45000-59000

The first rule allows the client at 5.6.7.8 to connect using an ephemeral source port to any FTP server using well-known port TCP/21.

The second rule allows any IP address on the internet to connect to the client at 5.6.7.8, where the server’s source port is 20, and the client port can be in the range of ephemeral ports (45000 to 59000 is just an example – the ephemeral port range could be literally any range above 1023).

Well, this kind of sucks because the more “holes” you have in your firewall, the less secure it is, and this rule creates 14,000 holes! Since the FTP client isn’t the only process that can use ports from the ephemeral port range, this gives a hacker potential access to literally any process that uses an ephemeral port! All the hacker has to do is spam that port range using a TCP source port of 20. When the hacker gets a response, she knows that a process on the client is listening to that port. Commence hacking.

Which is where PASV mode comes into play.

PASV tells the server NOT to make the data connection. Instead, the intent is for the client to originate the data connection. Now, for the client to access any FTP server on the internet, the client’s rule looks like this:

ALLOW 5.6.7.8:ANY ANY:20-21

This allows the client at 5.6.7.8 using any ephemeral port to first connect to any server on port 21 (control channel), and then establish a data connection to port 20.

At first, this seems like a good solution, but we end up with the same problem we had with the server connecting to the client on port 20, but in reverse. More band-aids later, when the client issues PASV, the server will respond with a blurb:

227 Entering Passive Mode (A,B,C,D,X,Y)

This should be interpreted by the client the same way the server interprets the PORT command: The client should connect to the server at IP address A.B.C.D on port X*256+Y.

Now, the client needs these rules:

ALLOW 5.6.7.8:ANY ANY:21

ALLOW 5.6.7.8:ANY ANY:1024-65535

The first rule allows the client to initiate a connection from 5.6.7.8 using any ephemeral port to any server on TCP/21. Then, for the data connection, the second rule allows outbound access from the client to any destination IP address on any high port.

If the network administrator wants to block the client from accessing other services such as SMTP (TCP/25), those services are already blocked, because no rule allows them. Likewise, neither rule allows any inbound access.
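To check rule sets like these without touching a real firewall, here is a toy Python model. It is only a sketch under some loud assumptions: the rules are stateless, anything not explicitly allowed is denied, and the `Rule` and `allowed` names are invented for this example. It is not how any particular firewall product works.

```python
from typing import List, NamedTuple


class Rule(NamedTuple):
    """One ALLOW rule: source IP/ports, then destination IP/ports."""
    src_ip: str        # "ANY" or an exact address
    src_ports: range
    dst_ip: str        # "ANY" or an exact address
    dst_ports: range


ANY_PORT = range(0, 65536)


def allowed(rules: List[Rule], src_ip: str, src_port: int,
            dst_ip: str, dst_port: int) -> bool:
    """Return True if any rule matches; everything else is denied."""
    for rule in rules:
        if (rule.src_ip in ("ANY", src_ip) and src_port in rule.src_ports
                and rule.dst_ip in ("ANY", dst_ip) and dst_port in rule.dst_ports):
            return True
    return False


# Client-side rules for PASV mode, as written above.
pasv_client_rules = [
    Rule("5.6.7.8", ANY_PORT, "ANY", range(21, 22)),
    Rule("5.6.7.8", ANY_PORT, "ANY", range(1024, 65536)),
]

# Client-side rules for active mode, for comparison.
active_client_rules = [
    Rule("5.6.7.8", ANY_PORT, "ANY", range(21, 22)),
    Rule("ANY", range(20, 21), "5.6.7.8", range(45000, 59001)),
]

print(allowed(pasv_client_rules, "5.6.7.8", 50000, "1.2.3.4", 5100))   # True
print(allowed(pasv_client_rules, "5.6.7.8", 50000, "1.2.3.4", 25))     # False
print(allowed(pasv_client_rules, "9.9.9.9", 20, "5.6.7.8", 45000))     # False
print(allowed(active_client_rules, "9.9.9.9", 20, "5.6.7.8", 45000))   # True
```

The last two lines show the difference that matters: the active-mode rules let a stranger with source port 20 reach the client, and the PASV rules do not.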

Because both connections originate from the client when the server is in PASV mode, the server’s firewall needs rules like this:

ALLOW ANY:ANY 1.2.3.4:21

ALLOW ANY:ANY 1.2.3.4:5000-5200

The first rule allows any client using any source port to connect to the server at 1.2.3.4 using TCP/21, which is the well-known port for FTP.

The second rule assumes that the FTP server has been configured with the high-port range of TCP/5000 to TCP/5200, allowing connections from any source IP address using any source port.

At this point, on the client, the TCP connection table should have entries similar to:

| Channel | Client | Server |
| --- | --- | --- |
| Control | Source: Ephemeral | Destination: TCP/21 |
| Data | Source: Ephemeral | Destination: PASV Range |

Most FTP server software allows the administrator to configure the high port range, but some older software uses a fixed range (again, to heck with “rules” for FTP).

Tip: If you have intermittent client connectivity issues, where the control channel works just fine, but the data channel in PASV mode periodically fails, check to make sure your FTP server software has the correct high port range configured, and double check with your network administrator that the firewall matches what is configured on the server.

And The Rest

At this point, the client has a successful control channel as well as a successful data channel.

The client can:

- Request a directory listing from the server using the LIST command

- Download a file using the GET command

- Download multiple files using MGET

- Upload a file using PUT

- Upload multiple files using MPUT

Some FTP servers provide resume capability, in the event that the control or data channel is broken during transfer, and others don’t. Some FTP servers allow the client to run shell commands, create or remove folders, delete files, append to files, and the like, while others don’t.

Since there really is basically no standard for FTP, there is no way to guarantee that every server and every client have implemented every feature. In addition, the server’s administrator might have some capabilities intentionally excluded based on user permissions, meaning, if you log in as a regular user, you might not be able to run shell commands or delete files (as an example).

To download multiple files, use the MGET command, or use a GUI client such as FileZilla that allows you to have a download queue.

When the client is done, it disconnects using QUIT or BYE.

And, that’s about it.

Other Stuff

There are a few more features that are worth mentioning, but are not really salient to troubleshooting:

Most client FTP software allows you to run a script, which is a series of commands that get executed in sequence when the client connects to the server.

Unless you use some other form of authentication, the disadvantage here is that the username and password are usually stored as part of the script, and passwords (in particular) should NEVER be stored in cleartext, and NEVER as part of a script.

Here is a sample script that downloads all files in Tim’s home folder, and then removes the files from the server:

CONNECT ftpserver.somedomain.com

USER tim

PASS ********

BIN

CD /tim-home

MGET *

DELE *

BYE

Tip: The best way to troubleshoot an errant script is to connect manually using the specified user, then run each command, one at a time, as they appear in the script file. This helps find typos, where a file name or directory name is incorrect, or permissions issues where the user’s permissions on the server have changed. For example, maybe Tim has permissions to download the files but not delete them.
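For comparison, here is roughly what the sample script does, sketched with Python’s standard `ftplib` module. Treat it as a starting point, not a drop-in replacement: the `fetch_and_delete` and `run_job` names and the `FTP_PASSWORD` environment variable are assumptions made up for this example, and it assumes the folder contains only plain files.

```python
import os
from ftplib import FTP


def fetch_and_delete(ftp, remote_dir: str, local_dir: str) -> list:
    """Rough equivalent of: BIN, CD remote_dir, MGET *, DELE *."""
    ftp.cwd(remote_dir)
    fetched = []
    for name in ftp.nlst():
        local_path = os.path.join(local_dir, os.path.basename(name))
        with open(local_path, "wb") as fh:
            # retrbinary switches to binary (image) mode before the transfer.
            ftp.retrbinary("RETR " + name, fh.write)
        # Delete only after the download finished without an exception.
        ftp.delete(name)
        fetched.append(name)
    return fetched


def run_job() -> None:
    # Hypothetical: the password comes from the environment, not from the script.
    password = os.environ["FTP_PASSWORD"]
    with FTP("ftpserver.somedomain.com") as ftp:
        ftp.login("tim", password)
        print(fetch_and_delete(ftp, "/tim-home", "."))
```

Calling `run_job()` runs the whole thing. Because each step is a separate call, a failure tells you which command the server rejected, which is the same idea as running the script by hand one line at a time.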

On the server side, aside from permissions, the administrator can configure what is called a “virtual root”.

In the example above, when Tim logs in, he gets dropped into a root folder, presumably with hundreds or thousands of other users’ folders. If the administrator has done their job properly, Tim should only be able to see a folder called “tim-home” if he LISTs the root folder. If he can see other folders, not only could that inadvertently disclose information, it gives Tim the ammunition he needs to try to hack around to get into those other folders.

Although there are all sorts of things you can do with Windows permissions, most FTP servers run on Linux / Unix, and in Linux / Unix, you have three basic permissions:

- Read

- Write

- Execute

For files, this is obvious – Read allows you to read the file, write allows you to write the contents of the file, and execute allows you to run the file, as in a binary or script file.

So, for a document, you might need Read+Write, but for a script file, you would need Read+Execute.

For a directory:

- Read allows you to see a list of files

- Write allows you to create (or delete) files

- Execute allows you to enter (traverse) the directory itself (cd directory)

So, to maintain confidentiality and reduce hacker-bait, the administrator might set the root folder’s permissions as Execute only. If Tim LISTs the root folder, he won’t see anything, but if he executes CD tim-home, he will find himself in the tim-home directory, where Tim presumably has Read, Write, as well as Execute.
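Here is a small Python sketch of what “Execute only” looks like in terms of Unix mode bits. It assumes Tim falls into the “other” permission class and that the root folder has mode 711; both are just examples, and `describe_dir_access` is a made-up helper name.

```python
import stat


def describe_dir_access(mode: int) -> dict:
    """What users in the 'other' class may do in a directory with this mode."""
    return {
        "list": bool(mode & stat.S_IROTH),           # Read: see the file names
        "create_delete": bool(mode & stat.S_IWOTH),  # Write: create or delete files
        "traverse": bool(mode & stat.S_IXOTH),       # Execute: cd into it
    }


print(stat.filemode(stat.S_IFDIR | 0o711))  # drwx--x--x
print(describe_dir_access(0o711))
# {'list': False, 'create_delete': False, 'traverse': True}
```

With this mode, a LIST of the root folder comes back empty for Tim, but CD tim-home still works.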

Since Tim can’t see anything in the root folder, this can be confusing, especially to a GUI FTP client that shows the user a “friendly” directory tree. Without Read permissions, a GUI FTP client will simply list an empty root folder. In most of these programs, you can set an initial folder as part of the configuration, so the FTP program will explicitly issue CD tim-home (or whatever folder), so that the lack of Read permission for the root folder is irrelevant.

But, there is another option.

Most FTP programs support what is known as a “virtual root”. When the FTP administrator creates the “Tim” user, they can set a virtual root folder, for example, /home/tim-home. When Tim logs in, rather than going to the true root (/home, for example), he is immediately dropped in /home/tim-home, but to Tim, it appears as “/” (root).

This is also known as “root jail”, where the root changes based on the user and / or context, and the user is “jailed” within the virtual root with no access to higher-level folders.

Tip: Pay attention to the folder structure and permissions. Check to see if you can create a file, by uploading a 1-byte “test.txt” file and then deleting it. If a root jail is in play, make sure your permissions work as expected.

How to Decode PORT and PASV

If the client issues a PORT command, it tells the server what IP address and TCP port to connect to for the data channel.

If the client issues a PASV command, the server tells the client what IP address and TCP port to connect to for the data channel.

The first 4 numbers are the IP address. In our example above, 192,168,1,101 is the IP address 192.168.1.101.

The last 2 numbers are the TCP port, where the port number = X*256+Y. In the example above, 23,237 is the port 23*256+237, or 6125.
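If you are reading a lot of these out of a log or a packet capture, a few lines of Python will do the arithmetic for you. This is a minimal sketch: `decode_host_port` is a made-up name, and it simply looks for the first run of six comma-separated numbers in whatever line you hand it, so it works for both a PORT command and a 227 reply.

```python
import re
from typing import Tuple


def decode_host_port(line: str) -> Tuple[str, int]:
    """Extract (ip, port) from a PORT command or a 227 PASV reply."""
    match = re.search(r"(\d+),(\d+),(\d+),(\d+),(\d+),(\d+)", line)
    if match is None:
        raise ValueError("no A,B,C,D,X,Y group found")
    numbers = [int(n) for n in match.groups()]
    if any(n > 255 for n in numbers):
        raise ValueError("each of the six numbers must be 0 to 255")
    ip = ".".join(str(n) for n in numbers[:4])
    port = numbers[4] * 256 + numbers[5]
    return ip, port


print(decode_host_port("PORT 192,168,1,101,23,237"))
# ('192.168.1.101', 6125)
print(decode_host_port("227 Entering Passive Mode (192,168,1,101,23,237)"))
# ('192.168.1.101', 6125)
```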

Encryption: FTP+S, FTPS, and SFTP

Once upon a time, people wanted to do things securely on the internet.

What does “securely” mean?

“Securely” basically means “privately” in this context.

Back then, those people wanted to be able to buy stuff online by sending their credit card information to a website without anyone else being able to copy it while it was being transmitted on the internet.

The analogy I always use is: “Think of the internet as a crowded room”. If I tell you my credit card number in a crowded room, there is ample opportunity for someone nearby to monitor my communication, and thereby obtain my credit card number without my permission.

To prevent this, I’m going to scramble my credit card number in a predictable way, so that ONLY you and I can unscramble it. Now, as I speak the scrambled (or encrypted) information, anyone nearby can intercept it, but they can’t use it unless they can unscramble (decrypt) it.

As you have probably guessed by now, because FTP was designed long ago in an academic environment where there was little consideration for security, other than prompting for credentials (authentication), there is zero security. This means that every bit of security implemented by a modern FTP server and client got there because of more band-aids and fixes to the FTP standard.

With a network monitoring tool such as Wireshark or even just TCPDump, an attacker can capture the network packets transmitted between the client and server, and then use Wireshark to decode them. Without encryption, anyone with physical access to your network can view:

- Your username and password to log in to the FTP server

- Every command you send to the server, and every response from the server

- The name and contents of every file you upload or download

Obviously, if an attacker has your username and password for the FTP server, they have access to any files stored on the server to which your user credential has access. However, most people reuse credentials, which means that the attacker might also have access to your Amazon account, your e-mail, or your bank account, if you happened to use the same username and password for any of those.

A casual observer might not think there is anything special to be learned by watching the commands you send to the server and the server’s responses. However, these can be used to fingerprint the server or the client or both. At minimum, you can probably tell the software name and version of the FTP server, and you might be able to determine the underlying operating system and version, and you might be able to tell the same for the client. A clever attacker might know that the specific FTP server software or operating system version is vulnerable to a specific type of attack – for example, on an unpatched system that contains vulnerable components. Now, the attacker has everything they need in order to try to break into the server through a vulnerable component, and they could potentially gain access to way more than just what FTP is serving up. They could potentially get root access to the entire server! (If you don’t know what this means, just take my word that it’s really bad)

What about files and filenames? You could easily use a third-party tool such as WinZip or PGP to encrypt the file before you transmit it, which is absolutely a good precaution. However, even if an attacker has just the filename, they might be able to leverage that against you. For example, let’s say you upload a file called “Uncle Tim’s New Will.zip”. The attacker now knows:

- You have an uncle named Tim (good for social engineering or maybe the answer to a security question on your Amazon account?)

- Tim probably has you in his will, which means that the attacker might be able to steal Tim’s money or your money or both

- Tim recently changed his will, which means that something recently changed in Tim’s life, prompting him to update his will. More fodder for social engineering, and maybe even a couple of security answers.

Not to mention the contents of the file itself, if not encrypted, probably contains a goldmine of personal information, including birth dates, social security numbers, drivers’ license numbers, lists of assets, and maybe even bank account numbers, vehicle identification and license plate numbers, and the like.

Again, to see all of this, the attacker has to have physical access to your network, or the network where the server resides. However, if you access a server on a public network, or if your traffic traverses a public network, then you must assume that your traffic is being monitored.

Which takes us back to people who wanted to buy stuff on the internet, and more reasons why FTP is needlessly complicated.

In 1994, the world wide web took us by storm. As the web grew at an exponential rate, people quickly found that they wanted to pay for goods and services over the web, but there really wasn’t a way to do this securely.

Netscape and RSA co-developed “Secure Sockets Layer” (SSL), and version 2.0 was released in 1995. Having had severe security flaws, version 1.0 was never released, and version 3.0 quickly followed 2.0, which had some fairly severe design limitations, but both SSL 2.0 and 3.0 were perfectly serviceable at the time.

Originally designed to secure Hypertext Transfer Protocol (HTTP), SSL creates an encrypted wrapper around the entire TCP session. Since regular HTTP expects to start serving data at the application layer as soon as the client connects, SSL requires a separate port that expects SSL negotiation to occur first, followed by an encrypted session, and then the application layer to begin. Where the regular well-known port for HTTP is TCP/80, SSL established the well-known port of TCP/443 for HTTPS (where the “S” stands for “SSL” or “Secure”, depending on who you ask).

Just to round out the rest of TLS history, TLS 1.0 was released in 1999 as an extension to SSL 3.0, with support for larger keys and modern ciphers. Eventually, TLS 1.1 and 1.2 were released in 2006 and 2008, respectively, and TLS 1.3 was finalized in 2018 (RFC 8446). SSL 3.0, TLS 1.0, and TLS 1.1 have all since been deprecated due to the discovery of various flaws and attacks afforded by advances in computing capability, which leaves TLS 1.2 and 1.3 as the versions to use. So, for 10 years, SSL 3.0 and TLS 1.0 dominated the internet security landscape.

Jumping back to 1995 for a minute, when HTTPS was first introduced, it proved the concept quite brilliantly that SSL could protect any kind of data that you could transmit within a TCP session. So immediately, we saw “secure” versions of all of the normal protocols, each with its own “secure” port:

- SMTP (E-mail) uses TCP/25, and “SMTPS” (now deprecated) uses TCP/465.

- LDAP (Directory) uses TCP/389, and “LDAPS” uses TCP/636

- etc…

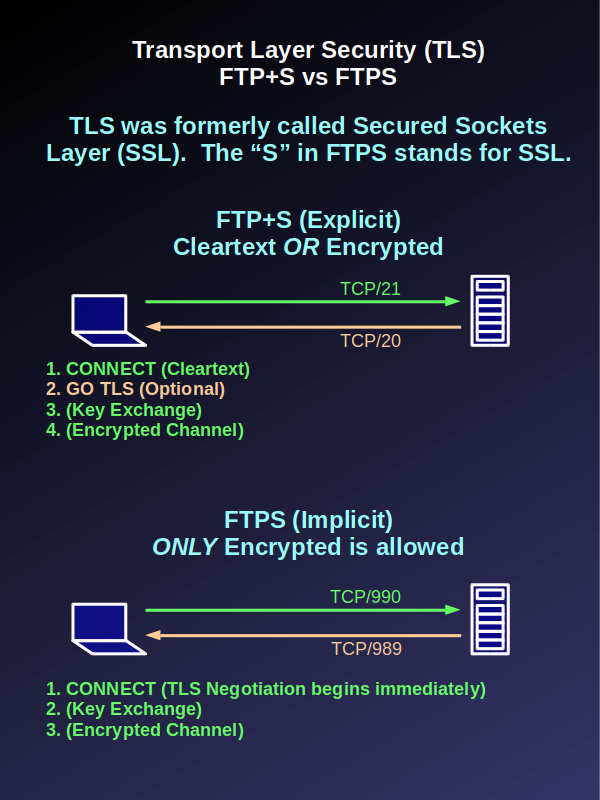

And, like all of these other protocols, FTP has a “secure” version as well, where “FTPS” uses TCP/990. Of course, FTP being an inherently-flawed protocol, you have to have a separate secure TCP port for the data channel, which uses TCP/989.

And, all was well with the world.

People who need security can connect to SSL (or TLS) port 990, while everyone else who is downloading cat memes can simply connect to good old port 21.

Until some ass-hat came up with the idea of explicit TLS.

You see, in my opinion, it isn’t even named correctly. When I think of requiring TLS, I think of that as explicit, as in, if you connect to FTP port 990 you must explicitly use TLS.

Not so!

Apparently, connecting to TCP/990 merely implies that you want to use TLS, and thus it’s called “Implicit TLS”, and as I’ve said, this is a stupid name.

Called “FTP+S”, the way “explicit” TLS works, is that the client tells the server when to initiate a TLS session, but it does so on the normal cleartext control channel.

Just explaining this, and understanding how stupid this is, is going to require that we dump out the rest of the entire box of band-aids.

The concept behind explicit TLS is that the client connects to the normal FTP port, TCP/21, and can then choose whether it wants TLS or not. I guess this is good for firewall administrators, but horrible during a security audit.

Explicit TLS Problem #1 – AUTH TLS

What’s the first thing you do when you connect to an FTP server? YOU PRESENT YOUR CREDENTIALS, which consist of a username and password (and there are other options, but let’s stop there).

So, if you have to connect, then auth, and THEN ask for TLS, guess what? Your authentication (sending your credentials to the server) happens in cleartext!

So, our first big fat trauma bandage (we’re past band-aids now) is to allow the client to ask for TLS protection for the authentication process. Which, it wouldn’t need at all, if the client had simply connected on port 990, which is explicitly encrypted.
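For what it’s worth, here is the order of operations a client has to get right, sketched with Python’s standard `ftplib.FTP_TLS` class. The `protect_session` and `connect_explicit_tls` names are made up for this example, and whether a given server accepts or enforces each step is up to its configuration.

```python
from ftplib import FTP_TLS


def protect_session(ftps, user: str, password: str) -> None:
    """Secure the control channel first, then log in, then secure the data channel."""
    ftps.auth()                 # sends AUTH TLS, then wraps the control channel in TLS
    ftps.login(user, password)  # USER / PASS now travel inside the TLS session
    ftps.prot_p()               # sends PBSZ 0 and PROT P so the data channel is encrypted too


def connect_explicit_tls(host: str, user: str, password: str) -> FTP_TLS:
    ftps = FTP_TLS(host)        # ordinary cleartext TCP connection to port 21
    protect_session(ftps, user, password)
    return ftps
```

If any one of these three calls gets skipped or reordered, something ends up in cleartext, and that is exactly the kind of thing that is easy to miss until an audit.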

Explicit TLS Problem #2 – PROT P

Securing the control channel does not secure the data channel. After AUTH TLS, the client has to ask for that separately by sending PBSZ 0 followed by PROT P (the commands defined in RFC 4217). Which is another big fat trauma bandage that wouldn’t be needed if so-called explicit TLS simply didn’t exist.

If the client chooses to forgo TLS, it can simply download all of the sensitive information it wants in cleartext, over the internet, unless the server is configured to dis-allow this.

Explicit TLS Problem #3 – Auditing

Most FTP server software allows the administrator to set a policy which requires certain users or groups to both authenticate securely, and requires that files and other information must be downloaded securely. The problem with this approach is that the administrator has to actively go in and set a policy, and if he doesn’t know any better, a flawed or incomplete policy definition would allow a client to do whatever the heck the client wants, including both authenticating and downloading files in cleartext.

Or, you could just simply disable explicit TLS in favor of FTPS, which is, by definition, ALWAYS secure. Big fat trauma bandage #3.

And, since there are no standards for FTP, sometimes the control channel is encrypted, and sometimes it isn’t.

Which brings us to Explicit TLS Problem #4 – NO STANDARDS

Some people are going to read this, and flip their shit. They are going to throw their hands in the air and shake their fists at the monitor, and scream, “THAT’S NOT HOW IT WORKS!!!”

Let me revisit a couple of salient points for you:

- THERE IS NO NEED FOR IT. If you connect on port 990, everything you do is secure. Always and without fail. As a matter of fact, just disable port 21 because there is no need for it at this point, or you can have a simple daemon (Unix for “service”) that responds on port 21 with “You are required to connect to this server using TLS on port 990”.

- THERE ARE NO STANDARDS. “YES THERE ARE!!!” I hear you shout. Right… there are MANY standards, which is the same as having NO standards. “But, if you comply with the latest RFC”, I hear you meekly state… AGAIN, if you just disable cleartext FTP and use port 990, then everything is always secure, all the time.

Tip: Disable both cleartext FTP and so-called explicit TLS. Just block TCP/21 all together. Problem solved.

SSH – A Far Superior Option

At about the same time SSL was being developed by Netscape and RSA, “Secure SHell” (SSH) was also under development.

Although SSL/TLS is ideal for protecting more modern protocols like HTTP and SMTP that use a single TCP/IP port, even if you protect FTP using TLS, it doesn’t fix the myriad of other problems caused by the fact that FTP is an extremely old and inefficient standard.

SSH was designed to standardize and protect what was (at the time) an unwieldy set of remote access protocols:

- Remote Shell (rsh)

- Remote Login (telnet, rlogin)

- Remote Execution (rexec)

- Remote Copy (rcp)

- And, yes, even FTP, which by 1995 everyone generally agreed sucked

In addition to these capabilities, SSH allows port forwarding and redirection for VPN / pseudo VPN, where the client can redirect a local port (on the client side) to the server, or ask the server to forward the port to another host.

For example, you could connect to an SSH host over the internet, which only requires TCP/22, and is completely encrypted. From there, you could:

- Run terminal commands

- Launch file transfer (SFTP)

- Copy a file (SCP)

- Forward a client port to the server (port redirect). For example, you could run a local X server, and launch a program on the server that connects to your local display.

- Forward a client port through the server to some other host on the network (port forwarding). For example, let’s say you have a Windows server on the same network as your Unix server. You could tell your Unix server to forward TCP/3389 to the Windows host, and then use Windows Remote Desktop to connect through the Unix server to the Windows server.

All of this is possible using only ONE TCP port, TCP/22, because SSH supports multiple bidirectional channels. This means no more data ports, and all channels are explicitly encrypted by SSH.
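To make the Remote Desktop example concrete, here is a small sketch that builds the OpenSSH client command for that kind of forward. The host names (`unixhost.example.com`, `winhost`) and the helper name are hypothetical. `-L` and `-N` are standard OpenSSH client options: `-L` sets up the local forward, and `-N` tells ssh not to run a remote command.

```python
import shlex


def ssh_forward_command(gateway, local_port, target_host, target_port):
    """Build an ssh command that forwards local_port, through gateway,
    to target_host:target_port on the far network."""
    return [
        "ssh",
        "-N",  # no remote command, just hold the tunnel open
        "-L", f"{local_port}:{target_host}:{target_port}",
        gateway,
    ]


# Hypothetical hosts: the Unix server is the SSH gateway, and the
# Windows server sits behind it on the same network.
cmd = ssh_forward_command("me@unixhost.example.com", 3389, "winhost", 3389)
print(shlex.join(cmd))
```

With the tunnel up, you would point Remote Desktop at localhost on the chosen local port. The only thing crossing the internet is the encrypted SSH connection on TCP/22.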

In addition to all of this, SSH also supports key-based (public key) authentication, allowing an automated process to connect remotely to another server without having to embed a password in a script file.

Tip: SFTP always denotes SSH-based file transfer, which only uses a single port (TCP/22). FTPS always denotes SSL-based FTP, while FTP+S implies explicit TLS.

Tip: SFTP is superior in all regards to any form of FTP, all of which should be deprecated and disabled.

FTP and Firewalls

I promise, we’re in the home stretch now.

If you were personally wearing all of the band-aids that we discussed regarding FTP, you would look like the Mummy.

But, like Clark’s “Jelly of the Month” subscription, given to him by his boss in National Lampoon’s Christmas Vacation, FTP is the shitty gift that just keeps on giving, the whole year ’round.

The problem with firewalls is that pesky data connection.

We’ve already looked at PASV mode as a work-around, when the client is behind a firewall.

HOWEVER, there is yet another problem.

Most firewalls perform Network Address Translation (NAT).

So, on the private side of the firewall, the client’s IP address might be 192.168.1.101.

For reference, any of the following addresses are covered by RFC 1918, and are considered non-routable on the internet. They are exclusively used for private networks, and RFC 1918 is the reason we didn’t run out of IP addresses 20 years ago, because I can have 65,000 devices connected to my private network using 192.168.x.x, and so can you (at your house, on your own network). Because the two networks never talk to each other, there is never a problem. My firewall performs NAT, allowing all of my devices to share a “real” internet address, and so does yours. If I have to connect to your server, you would configure your firewall to forward the connection to whatever host is appropriate on your internal network, and all I have to know is your “real” internet address.

RFC 1918 addresses:

- 10.x.x.x

- 192.168.x.x

- 172.16.x.x through 172.31.x.x
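If you ever need to check an address against these three ranges in a script, Python's standard `ipaddress` module does the work. This is a minimal sketch; the function name `is_rfc1918` is my own.

```python
import ipaddress

# The three RFC 1918 blocks listed above, in CIDR notation.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),       # 10.x.x.x
    ipaddress.ip_network("172.16.0.0/12"),    # 172.16.x.x through 172.31.x.x
    ipaddress.ip_network("192.168.0.0/16"),   # 192.168.x.x
]


def is_rfc1918(address):
    """Return True if the address falls inside one of the RFC 1918 blocks."""
    ip = ipaddress.ip_address(address)
    return any(ip in net for net in RFC1918_NETWORKS)


print(is_rfc1918("192.168.1.101"))  # a typical home address
print(is_rfc1918("50.60.70.2"))     # a public address
```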

Most home networks use 192.168.1.x, and most big companies use 10.x.x.x.

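Some companies have started using 100.x.x.x. Part of that range (100.64.x.x through 100.127.x.x) is defined by RFC 6598 for intra-carrier use (carrier-grade NAT), but using it on a private network is non-standard, and not recommended.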

So, back in FTP-land, your client connects to a server, authenticates, and then issues the PORT command, telling the server how to connect the data channel.

BUT, you’re on your home network, and your client’s IP address is 192.168.1.101, and your firewall NATs this to a “real” IP of 50.60.70.2, which is assigned by your ISP. So if your client issues PORT 192,168,1,101,x,y, the server will try to connect to 192.168.1.101, which we just discussed is non-routable on the internet.
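In case the x,y part looks mysterious: the PORT argument is the four bytes of the IP address followed by the two bytes of the TCP port, high byte first, all written as decimal numbers. A quick sketch (the function name is mine, and port 50000 is just an example):

```python
def format_port_command(ip, port):
    """Encode an IPv4 address and TCP port as an FTP PORT command."""
    h1, h2, h3, h4 = ip.split(".")
    # The 16-bit port is split into a high byte (x) and a low byte (y).
    p1, p2 = divmod(port, 256)
    return f"PORT {h1},{h2},{h3},{h4},{p1},{p2}"


# The client's private address leaks straight into the command.
print(format_port_command("192.168.1.101", 50000))
```

The server reverses the math (x * 256 + y) to recover the port, and dutifully tries to connect to whatever address it was given.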

And this is where the client-side firewall “fixup” comes into play. Since the firewall knows that TCP/21 is used for FTP, it looks at the traffic, sees the PORT command, and changes it to PORT 50,60,70,2,x,y and then listens on port x,y. When the server connects to the firewall’s outside IP address on port x,y, the firewall forwards the data connection to the client at 192.168.1.101.

All of this needless effort is called a “fixup” – yet one more band-aid that keeps FTP alive.
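Stripped of the vendor magic, the rewrite half of a fixup is little more than a search-and-replace on the control channel. The sketch below is a simplification under stated assumptions: it works on one command line at a time with the trailing CRLF already removed, it keeps the same port numbers, and it ignores the listening and forwarding half entirely. The function name is made up.

```python
import re

# Matches a PORT command with six comma-separated decimal fields.
PORT_RE = re.compile(r"^PORT (\d+),(\d+),(\d+),(\d+),(\d+),(\d+)$")


def fixup_port_command(line, public_ip):
    """Swap the private address in a PORT command for the firewall's
    public address, leaving the two port bytes alone."""
    m = PORT_RE.match(line)
    if m is None:
        return line  # not a PORT command, pass it through untouched
    port_bytes = [m.group(5), m.group(6)]
    return "PORT " + ",".join(public_ip.split(".") + port_bytes)


print(fixup_port_command("PORT 192,168,1,101,195,80", "50.60.70.2"))
```

Note that this only works because the firewall can read the command in the clear.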

“So”, you might ask, “How does this work if the control channel is encrypted, and the firewall can’t see the PORT command?”

Glad you asked! It doesn’t! In this case, the SERVER must be configured to ignore the address in the PORT command, and instead connect back to the client’s apparent source IP.

Yet one more thing I hate about FTP – if you use TCP/990, the server must be configured to force the client to use PASV mode, which is OK because “Active” mode shouldn’t exist anyway.

Or, you could chuck the whole thing and use SSH, which only uses one TCP port, is always encrypted, and always works.

On the server side, you have the same problem.

If the client switches to PASV mode, the server responds with its own IP address, which the firewall must “fix up” to be the real internet address, or the CLIENT must be configured to ignore the IP address in the PASV mode response, and instead use the server’s apparent IP address.
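Here is roughly what "ignore the IP address in the PASV response" means on the client side. This is a sketch, not any particular client's implementation. The function names and the `trust_server_address` switch are hypothetical, and the reply text is a typical 227 response.

```python
import re

# The 227 reply carries six decimal fields in parentheses:
# four address bytes, then the port's high and low bytes.
PASV_RE = re.compile(r"\((\d+),(\d+),(\d+),(\d+),(\d+),(\d+)\)")


def parse_pasv_reply(reply):
    """Extract (host, port) from a 227 Entering Passive Mode reply."""
    m = PASV_RE.search(reply)
    if m is None:
        raise ValueError("not a PASV reply: " + reply)
    nums = [int(g) for g in m.groups()]
    host = ".".join(str(n) for n in nums[:4])
    port = nums[4] * 256 + nums[5]
    return host, port


def data_endpoint(reply, control_peer_ip, trust_server_address=False):
    """Decide where to open the data connection. Unless told otherwise,
    keep the port from the reply but use the address the control
    connection is already talking to."""
    host, port = parse_pasv_reply(reply)
    if not trust_server_address:
        host = control_peer_ip
    return host, port


reply = "227 Entering Passive Mode (192,168,1,10,195,80)"
print(data_endpoint(reply, "50.60.70.2"))
```

The server's private address is thrown away, and only the port survives. This works as long as the server's firewall forwards that port to the right host.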

BUT, if the client connects on 990 and uses PASV (which the client MUST do, because they are presumably behind a firewall), then the firewall can’t see the server’s response, and can’t “fix it”.

So, when the client is behind a firewall and connects to the secure port, the server must do TWO things:

- Force the client to use PASV mode

- Respond with the translated “real” internet address, because the firewall can’t “fix” it

However, you might have one FTP server that serves files for both internal and external clients – in this case, either the FTP server has to know which subnets are local so that it can respond with a private IP address, or you have to run two instances of the FTP server, where one responds with the internet-facing address and the other responds with the local address.
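The "server knows which subnets are local" option boils down to a lookup like the one below. Real FTP servers expose this as a configuration setting rather than code. The function name, the subnet, and the addresses are all made up for illustration.

```python
import ipaddress


def pasv_address_for(client_ip, local_networks, local_address, public_address):
    """Pick the address to advertise in the PASV reply: the private one
    for clients on a local subnet, the translated one for everyone else."""
    client = ipaddress.ip_address(client_ip)
    for net in local_networks:
        if client in ipaddress.ip_network(net):
            return local_address
    return public_address


# Hypothetical setup: the server is 192.168.1.10 internally and is
# reachable from the internet as 50.60.70.2.
LOCAL_NETS = ["192.168.1.0/24"]
print(pasv_address_for("192.168.1.55", LOCAL_NETS, "192.168.1.10", "50.60.70.2"))
print(pasv_address_for("8.8.8.8", LOCAL_NETS, "192.168.1.10", "50.60.70.2"))
```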

OR, chuck the whole thing and run SSH. SSH doesn’t have these problems because it uses ONE port.

Conclusion

FTP is a nearly-50-year-old protocol with no standards and no security.

FTP sucks. If you have to support it, try to get the other side to use SFTP, which is part of the SSH suite, and is technically superior in all regards to SSL-based FTP.

Failing that, don’t use explicit FTP, which was designed by ass-hats. Instead, use the explicitly-implicit SSL-based FTP on port 990. BUT, make sure your server is configured to force the client to use PASV mode, and to return the server’s translated (“real”) address rather than its own local address.

Make sure everything you do is in BIN mode, unless you are dealing with an IBM mainframe, because IBM mainframes suck, and are the main reason FTP still exists.

Not that I would ever encourage drastic behavior, but I’m just sayin’, if you were to murder your company’s mainframe guy, they probably couldn’t find a replacement, and would be forced to get rid of the mainframe, and you could just turn off FTP. Just sayin’.