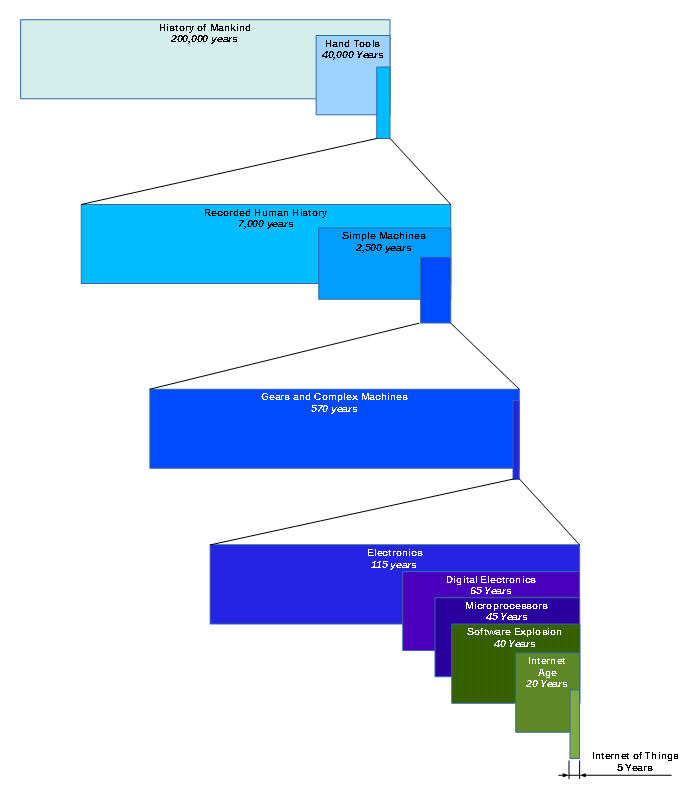

Science generally agrees that “mankind”, Homo sapiens, is about 200,000 years old.

During that span of time, technology has advanced at an ever-increasing rate, but there have been only a small handful of innovations – fundamental changes – that altered mankind’s ability to express itself technologically.

Hand Tools

Man’s first tools were those he could hold.

There is evidence to suggest that man and his predecessors used rocks as hammers, and produced cutting and stabbing weapons by carefully chipping flint rocks.

Although producing these tools required skill, their effectiveness came from transferring force efficiently through hard materials, and from a sharp tip or blade that concentrated that force into a tiny area.

From about 35,000 BC, man began to add handles to tools and weapons, allowing the wrist to be used as the pivot of an early lever, using the length of the handle to amplify the force of a swing.

A basic wooden spear could be fashioned using a flint knife and porous rock, and later, smaller sharpened stone tips could be affixed to the spear to inflict more damage and increase flight stability.

Axes, hammers, spears, and knives.

In those, we can see the inkling of basic machines.

From 200,000 BC to about 5,000 BC, every technology produced by man consisted of wedges and third-class levers (a swung handle, with the effort applied between the pivot and the load), created from objects we could pick up and hold in our hands.

Simple Machines

“Those pyramids didn’t build themselves”

We suspect that the Egyptians were capable of leveraging (pun intended) simple machines to create the pyramids, the largest of which date to around 2600–2500 BC.

The wheel (and axle) is one of the earliest simple machines. It lets a horizontal force move a load more efficiently by replacing sliding friction, which results from the weight of the load pressing against the ground, with much lower rolling friction. We suspect that the Egyptians used logs as rollers (an early wheel) to move huge stone blocks, some as large as 20 tons. Wood blocks or small stones were probably used as fulcrums to tip and rotate large blocks with wooden lever arms, to move them into position.

Much later, the Egyptians and Romans used the wheel for chariots and wagons.

In ancient Greece, Archimedes was the poster boy for the lever (trades distance for force when rotated), inclined plane (distributes vertical force over a larger horizontal distance), screw (distributes force over a larger axial distance when rotated), and pulley (trades linear distance for force; redirects a linear force).

These machines were used to build great stone structures, pump water, and build and sail ships.

Amazingly, every machine man produced from about 5,000 BC until the mid-1400’s AD was based on the wedge, wheel and axle, lever, inclined plane, screw, and pulley.

Prior to factories and mass-production techniques, simple machines were created using hand tools.

Gears and Cams

The earliest crown gears and “cage” gears were simply wheels with perpendicular posts mounted around the wheel’s outer edge, parallel to the rotation axis.

Crown gears were able to engage other crown gears, to transmit torque, or change the torque ratio between components.
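To make the idea of changing the torque ratio concrete, here is a minimal sketch that assumes ideal, frictionless gears. The function names and the post counts are hypothetical, chosen only for illustration.

```python
def output_torque(input_torque, driver_teeth, driven_teeth):
    """Torque at the driven gear of an ideal (lossless) gear pair."""
    if driver_teeth <= 0 or driven_teeth <= 0:
        raise ValueError("tooth counts must be positive")
    return input_torque * driven_teeth / driver_teeth


def output_speed(input_rpm, driver_teeth, driven_teeth):
    """Rotation speed at the driven gear of the same ideal gear pair."""
    if driver_teeth <= 0 or driven_teeth <= 0:
        raise ValueError("tooth counts must be positive")
    return input_rpm * driver_teeth / driven_teeth


# A 10-post wheel driving a 40-post wheel:
# four times the torque, at a quarter of the speed.
print(output_torque(5.0, 10, 40))   # 20.0
print(output_speed(120.0, 10, 40))  # 30.0
```

The trade is the same one the lever makes: what is gained in force is given up in distance (here, in rotation speed).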

The first true gears were also wooden, acting as a combined wheel, axle, and lever. Because they were wooden, and wood is a soft material, early gears needed to be quite large, in order to be sturdy.

Later, advances in metallurgy allowed gears to be strengthened and, eventually, miniaturized.

A cam is a wheel whose outer radius changes based on the angle of rotation. “Lobes” (bumps) on the outer surface of a cam can be used to actuate a function within a machine, or synchronize multiple functions. When combined with a lever or follower, a cam can translate rotary motion into linear motion.
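As a rough sketch of that idea, the code below models a cam with a single lobe and reports how far a follower resting on it would be lifted at each angle. The dimensions, the sine-shaped lobe, and the function names are all assumptions made for illustration, not a description of any particular historical cam.

```python
import math

BASE_RADIUS = 20.0      # radius of the round part of the cam (assumed units: mm)
LOBE_HEIGHT = 5.0       # how far the lobe rises above the base circle
LOBE_WIDTH_DEG = 90.0   # the lobe occupies the first 90 degrees of rotation


def cam_radius(angle_deg):
    """Outer radius of the cam at a given rotation angle, in degrees."""
    a = angle_deg % 360.0
    if a >= LOBE_WIDTH_DEG:
        return BASE_RADIUS  # on the base circle: no lift
    # Smooth rise and fall across the lobe
    return BASE_RADIUS + LOBE_HEIGHT * math.sin(math.pi * a / LOBE_WIDTH_DEG)


def follower_lift(angle_deg):
    """Linear displacement of a follower riding on the cam surface."""
    return cam_radius(angle_deg) - BASE_RADIUS


for angle in (0, 45, 90, 180, 405):
    print(angle, round(follower_lift(angle), 3))
```

Turning the cam steadily makes the follower rise and fall once per revolution, which is how rotation becomes a timed, back-and-forth linear motion.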

Gears and cams came into widespread use in the 1500’s (AD), and eventually evolved into elaborate clockworks, such as those used to run the automata incorporated into some of the Fabergé eggs, created in the 1890’s.

From giant factories to tiny cuckoo clocks, gears and cams, along with the simple machines, were the limiting factor in man’s ability to express himself technologically.

Although the method of powering these devices evolved and varied, including weights, pendulums, springs, water wheels, steam wheels (turbines), steam engines, gas engines, and eventually, electric motors, the basic mechanical principles remained the same from the 1500’s until the 1930’s.

Although the first gears and cams were created using hand tools, later generations were created using machines that, themselves, were driven by gears and cams, eventually leading to mass production techniques.

The advent of the printing press, created from gears and screw threads, led to the first mass-distribution of information. Prior to the printing press, books and papers had to be meticulously hand-copied, a slow and error-prone technique.

Meanwhile, looms, early machine tools, water wheels, and steam engines are all based on the gear, cam, and simple machines (lever, screw, pulley, etc…) but resulted in the ability to mass produce and mass distribute fabric, food, and standardized parts across the world. A factory is essentially a big machine that produces stuff, carried across the world by big machines such as boats and trains, that carry stuff.

Electronics

As a direct result of the work of Faraday, Tesla, and Edison, the budding field of electronics allowed new types of machines to be created that use electronic signals in place of mechanical ones.

Electronic machines could more reliably emulate mechanical ones, such as clockworks, but could also provide new capabilities such as particle and field emissions, and electromagnetism.

The first practical and widely used application of electronics was the Morse telegraph, a method of transferring simple information nearly instantly, in both directions, over long distances, using a pair of wires that carried a current to an electromagnet at the remote end. By repeatedly opening and closing the circuit with a switch called a “key”, an operator could transmit letters and numbers encoded as dots and dashes. Invented in the 1830’s, the telegraph was widely deployed by the 1860’s.
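The dot-and-dash encoding can be sketched in a few lines. This example uses the International Morse code table, which differs in places from the American Morse code used on early telegraph lines. The `encode` function and the choice of `/` as a word separator are illustrative assumptions.

```python
# International Morse code for letters and digits
MORSE = {
    "A": ".-",   "B": "-...", "C": "-.-.", "D": "-..",  "E": ".",
    "F": "..-.", "G": "--.",  "H": "....", "I": "..",   "J": ".---",
    "K": "-.-",  "L": ".-..", "M": "--",   "N": "-.",   "O": "---",
    "P": ".--.", "Q": "--.-", "R": ".-.",  "S": "...",  "T": "-",
    "U": "..-",  "V": "...-", "W": ".--",  "X": "-..-", "Y": "-.--",
    "Z": "--..",
    "0": "-----", "1": ".----", "2": "..---", "3": "...--", "4": "....-",
    "5": ".....", "6": "-....", "7": "--...", "8": "---..", "9": "----.",
}


def encode(text):
    """Encode letters and digits as Morse; spaces separate letters, ' / ' separates words."""
    encoded_words = []
    for word in text.upper().split():
        try:
            encoded_words.append(" ".join(MORSE[ch] for ch in word))
        except KeyError as err:
            raise ValueError(f"no Morse code defined here for {err.args[0]!r}") from None
    return " / ".join(encoded_words)


print(encode("SOS"))   # ... --- ...
print(encode("HI 5"))  # .... .. / .....
```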

By the early 1900’s, radio carried voice and data signals without the need for a wired infrastructure, and remained the primary method for long-distance communication for decades.

In the early 1940’s, advancements in electronics led to the development of radar and automated computers, both of which were significant to the Allies winning World War II.

After World War II, the first “general-purpose” computers used electronics to interpret and process instructions, to perform functions such as calculating artillery trajectories.

The ability to combine electronic components evolved with the advent of the circuit board, eliminating elaborate frame and wiring schemes to interconnect components.

From the 1900’s to the 1960’s, the most sophisticated machines man could build were built with analog electronics.

Early electrical and electronic experiments depended upon products such as conductive wire, created using mass-production techniques that leveraged gear-and-cam “factory” technology.

Early electronic components were created using electro-mechanical machines (mechanical machines driven by electric motors), and later generations were created using electronically-controlled machines, allowing greater precision, faster production rates, and lower defect rates.

The use of radio and television for communication allowed nearly-instantaneous dissemination of information to multiple recipients over long distances.

Digital Electronics

Invented in the late 1940’s, the transistor ushered in the wave of digital electronics.

By the 1960’s, Metal Oxide Semiconductor transistors (the modern transistor) were being mass produced and were commercially available.

Displacing mechanical switches, relays, and vacuum tubes, transistors are “solid-state” (no moving parts), allowing operation at much higher switching frequencies, with significantly higher reliability than the components they replaced.

High-speed, digitally operated, automated phone switches facilitated the Public Switched Telephone Network (PSTN), an interconnected network of telephone switches across North America, allowing any person with a phone to dial a number and connect automatically through the switch network to any other person with a phone, anywhere in North America. This allowed for the nearly instantaneous, two-way transfer of information between any two people on the phone network, without the need for expensive hardware, over distances that far exceed the limits of a radio transmission.

Digital electronics also led to more sophisticated general-purpose computers: machines that could process many thousands of instructions per second, widely used for military and business applications.

Early transistors were produced using analog, electronically-controlled machines. Later, machines controlled by digital electronics yielded even greater precision, allowing for greater miniaturization and increasing density, which led to the integrated circuit (IC): many transistors and other electronic components miniaturized and incorporated into a single, tiny chip.

Transistor-driven, hand-held devices, such as radios and calculators, were much smaller than their analog predecessors, could run on batteries, were easier to mass produce, and were affordable to most consumers.

Microprocessor

Resistor-Transistor Logic (RTL), one of the earliest transistor logic families, eventually gave way to Diode-Transistor Logic (DTL), and then to Transistor-Transistor Logic (TTL), which allowed faster switching speeds and provided highly-optimized, highly-compact digital switching. TTL became the workhorse logic family of its era, although modern processors are built with CMOS transistors instead.

Spanning two decades, the evolution of integrated circuits progressed to the point that TTL (Transistor-Transistor Logic) was common by the 1970’s.

This level of integration enabled the first generation of “micro” processors – all-in-one integrated circuits that combined the various parts of the central processing unit of a computer, which previously would have been composed of separate components wired together as part of a computer system’s “main frame”.

The microprocessor was a single “chip” (IC) capable of reading information, processing it, and transmitting the output, based on a user-defined program.

Through the 1970’s and 1980’s, tiny, inexpensive microprocessors ushered in a wave of previously-unimaginable devices, from games and toys, to tools and instrumentation.

Larger microprocessors were the basis of the first “microcomputers” – computers built around a single-chip CPU, often on a single board, small and inexpensive enough to be consumer products. Previous generations of computers had CPUs built from multiple boards mounted in a “frame” (or cabinet), requiring lots of power. With wide access to computing resources, almost anyone could now write software programs to create new computing applications.

Embedded Electronics

Conventional TTL-based electronics, developed in the 1970’s, continued to be used through the early 1990’s, at which point it was deemed at least as efficient to embed a tiny microprocessor with a fixed software program (“firmware”) as to design, create, test, and mass-produce a complex hardware TTL circuit.

With embedded controller-based designs, the software is virtual, and can be loaded or modified in real-time, in order to implement or modify the functionality of a physical system – a computer-controlled machine that can be re-programmed on the fly. By the mid-1990’s, devices implementing embedded controllers could be developed in a fraction of the time, for a fraction of the cost.

For the first time, man had developed “hardware” machines that could be built first and designed later.

More precisely, the function of a machine could change over time, implementing new functionality or adapting to changes by simply replacing or upgrading the firmware program used by the microcontroller.

Software

The first computers operated in “hardware”, using plug boards, switches, and dials to configure the function of the machine.

Modern computers process information based on a set of instructions encoded as data. Those instructions constitute a “program” that can be loaded into memory, or later, erased from memory and replaced with another program.
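A toy example may help show what “instructions encoded as data” means. The sketch below is a hypothetical, three-instruction machine invented for this illustration (it is not any real CPU’s instruction set): the same `run` function behaves differently depending on which program, stored as an ordinary list, is loaded into it.

```python
def run(program, value):
    """Execute a program (a list of (opcode, operand) pairs) on a single accumulator."""
    acc = value
    for opcode, operand in program:
        if opcode == "ADD":
            acc += operand
        elif opcode == "SUB":
            acc -= operand
        elif opcode == "MUL":
            acc *= operand
        else:
            raise ValueError(f"unknown opcode: {opcode!r}")
    return acc


# Two different "programs" are just two different pieces of data
double_plus_one = [("MUL", 2), ("ADD", 1)]
triple_minus_two = [("MUL", 3), ("SUB", 2)]

print(run(double_plus_one, 5))   # 11
print(run(triple_minus_two, 5))  # 13
```

Nothing about the machine itself changed between the two calls; only the data it was given to interpret.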

Due to their mutable nature, these programs are called “software”, in contrast to the immutable nature of “hardware” or hard-wired logic.

Eventually, symbolic programming languages were invented, which programmers could use to develop software more rapidly and effectively than with the CPU’s native assembly language or machine code.

Most modern software is written using a 3rd-generation language, or “3GL”, that provides an object-based or object-oriented paradigm, custom data types, many built-in functions, and some level of machine-independence. 3GL programs are compiled to native object code (machine code that’s specific to a CPU and architecture), and then linked with external libraries to form a fully-functional executable program.

4GLs (4th Generation Languages), a category often loosely taken to include scripting languages, provide a machine-independent environment (the interpreter, which is itself a compiled program) that interprets a program (or “script”) at run time, WITHOUT the need to compile or link the script prior to execution. One aspect of some 4GLs is the ability to use graphical design tools, which automatically generate an underlying script. Examples include “query by form” used with SQL (a database access language), and some Form / Report design tools whose output is a script that produces the specified design.

Constraint-based programming, sometimes called 5GL, allows the operator to specify a set of constraints and data sets, from which the 5GL performs an optimization analysis or returns a specific answer. The definition of a 5GL is elusive. One example is a “genetic” algorithm, where a specific set of modules are turned on and off randomly in an attempt to solve a specific problem; the “strongest” candidates are “bred” (combined) in various ways, and then randomly mutated to form the next generation. Each generation tends to produce a more suitable candidate.
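The breeding loop can be sketched as follows. This is a minimal, assumed setup rather than a definitive implementation: each candidate is a list of on/off switches, “strength” is simply the number of switches turned on, and the population size, mutation rate, and function names are arbitrary choices made for this example.

```python
import random


def fitness(genome):
    """Strength of a candidate: here, just the number of switches turned on."""
    return sum(genome)


def evolve(genome_len=20, pop_size=30, generations=40, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)  # seeded so that runs are repeatable
    population = [[rng.randint(0, 1) for _ in range(genome_len)]
                  for _ in range(pop_size)]
    best_per_generation = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        best_per_generation.append(fitness(population[0]))
        parents = population[: pop_size // 2]   # the "strongest" half
        children = [population[0][:]]           # keep the current best unchanged
        while len(children) < pop_size:
            mom, dad = rng.sample(parents, 2)
            cut = rng.randrange(1, genome_len)  # "breed" by splicing two parents
            child = mom[:cut] + dad[cut:]
            # Randomly flip a few switches (mutation)
            child = [1 - g if rng.random() < mutation_rate else g for g in child]
            children.append(child)
        population = children
    best = max(population, key=fitness)
    best_per_generation.append(fitness(best))
    return best, best_per_generation


best, history = evolve()
print(history[0], "->", history[-1])
```

Because the best candidate is always carried forward unchanged, the best score in `history` never decreases from one generation to the next.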

The idea was conceptualized by Alan Turing in 1936 as part of a mathematical concept called the “Turing Machine”: a general-purpose computing device capable of interpreting data as instructions, and manipulating data. The importance of “software” is that a general-purpose machine can perform a wide variety of functions without the need to hard-wire each function.

Computers grew, with ever-increasing memory, secondary storage, and computing power, allowing for larger, more sophisticated software programs. New types of displays, such as plotters and graphics terminals, and input devices such as the mouse, scanner, and digitizer, allowed for increasingly sophisticated software, and entirely new applications that couldn’t exist in the mechanical world.

The ever-increasing sophistication of software platforms and tools brings new levels of accessibility and rapid development. As an example, I’m typing this article into my web browser, which runs a 4GL set of JavaScript functions that interpret the data I’m typing and send it to another 4GL set of PHP functions running on a web server instance, interpreted by a native executable program running on Linux, developed using a 3GL.

The Largest Machine

Initially, computers were interconnected through terminal lines. Either terminals or microcomputers being used as terminals could connect to a large, central computer system. These central computer systems could communicate with each other, only if a terminal line was available between them. This required a huge “mesh network” of physical connections, allowing central computers to intercommunicate.

Using the PSTN (Public Switched Telephone Network), these central computers could be interconnected, using modems, over long distances.

Eventually, advances in microcontroller technology made it possible to build packet-switched networks, where any computer on a network can communicate with any other computer on the same network, so each computer system needs far fewer physical connections for intercommunication.
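The saving in physical connections is easy to quantify. In this small sketch (the function names are made up for illustration), a full mesh needs a dedicated line between every pair of computers, while a shared packet-switched network is assumed to need just one connection per computer.

```python
def full_mesh_links(n):
    """Dedicated lines needed so that every pair of n computers is directly connected."""
    return n * (n - 1) // 2


def shared_network_links(n):
    """Connections needed if each of n computers has one link to a shared network."""
    return n


for n in (5, 50, 500):
    print(n, full_mesh_links(n), shared_network_links(n))
# 5 10 5
# 50 1225 50
# 500 124750 500
```

The mesh grows with the square of the number of computers, which is why it quickly becomes impractical.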

Later, routers with more powerful embedded controllers connected multiple Local Area Networks (LANs) into Wide-Area Networks (WANs). Packet-switched networks could be interconnected using routers connected to the phone system, using faster modems powered by microcontrollers, leveraging faster phone switches capable of delivering greater fidelity and faster data rates. High-speed, dedicated data lines could transmit hundreds of kilobits per second between LANs, using microcontroller-based routers.

The largest, most complex machine ever built by mankind is a Wide Area Network with hundreds of millions of connected devices located all over the world, covering nearly every populated area on the planet, and it’s known as the internet.

The internet is a machine, where almost anyone on the planet can do the following:

- Access the machine from almost anywhere on the planet using microcontroller-based hardware

- Extend the machine’s database by publishing content using 4GL applications

- Extend the functionality of the machine itself by creating new types of applications, by writing 4GL software

As older components are replaced with newer ones, bandwidth and computing power within the machine continue to grow exponentially, a trend commonly associated with Moore’s law (the observation that transistor counts double roughly every two years), while the machine itself continues to expand in scope as companies bring new datacenters online to support new applications, compounding that growth.
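Moore’s law is usually stated as a doubling roughly every two years, and doubling compounds quickly. The sketch below contrasts that with simple linear growth; the two-year period is the commonly quoted figure, and the function names are illustrative assumptions.

```python
def growth_factor(years, doubling_period_years=2.0):
    """How many times larger a quantity becomes if it doubles every period."""
    return 2.0 ** (years / doubling_period_years)


def linear_factor(years, period_years=2.0):
    """For contrast: growth that adds one starting amount per period."""
    return 1.0 + years / period_years


for years in (2, 10, 20):
    print(years, growth_factor(years), linear_factor(years))
# 2 2.0 2.0
# 10 32.0 6.0
# 20 1024.0 11.0
```

After twenty years, doubling gives roughly a thousandfold increase, where linear growth at the same starting pace gives about elevenfold.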

Cloud-Based Computing

Originally, software ran on centralized computers, and users accessed the software through a terminal, which was like a portal where the user could interact with the software, even though it might be running hundreds of feet (or even hundreds of miles) away!

In the microcomputer era, software was something you bought at the store, or downloaded from another computer, to run locally on your own computer.

Initially, the internet was basically used to download and view files. The earliest web browsers would download a file, and then display its contents.

Early web-based applications ran on servers connected to the internet, and, like early terminal applications, the server would simply render the results, which your browser downloaded as a file and displayed to you.

Later, technologies such as JavaScript and Adobe Flash allowed applications to run inside the browser, while simply exchanging data with a server “out in the cloud” somewhere. This brought us the first true “web applications”, where the “web browser” was the platform, and 4GL software was downloaded dynamically and executed locally.

Initially, internet-based applications were distributed, where the software driving the application’s logic was stored in a few specific data centers, and executed on demand when a user invoked it.

In a cloud computing model, applications can interact with each other, completely automatically. For example, when you buy something online, the store might automatically update your social networking page.

Features and functionality can be rapidly extended through integration. For example, let’s say you want to build an internet-based application, and you want to include voice recognition functions. Rather than shop, buy, download and install a voice recognition module to run in your own data center, you can simply have your application forward the request to an internet application that already performs those functions.

New types of cloud-based applications are emerging, that allow non-developers to link applications together in new ways, to create software on the fly to meet their needs. IFTTT (If This Then That) allows users to create triggers that monitor an application or internet-connected device, and then perform functions or carry that data over to another application. For example, you can monitor for rain using a weather application, and turn your internet-connected sprinklers off automatically when it rains. All of the logic and processing for these “lifestyle” applications live in the cloud, actuated by simple devices and triggers.
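The trigger-and-action pattern behind the sprinkler example can be sketched in plain Python. This is not IFTTT’s actual API; the `Sprinkler` class, the `make_rule` helper, and the shape of the weather event are all hypothetical stand-ins.

```python
class Sprinkler:
    """Stand-in for an internet-connected sprinkler."""

    def __init__(self):
        self.on = True

    def turn_off(self):
        self.on = False


def make_rule(trigger, action):
    """Build an 'if this, then that' rule from a trigger test and an action."""
    def rule(event):
        if trigger(event):
            action()
            return True   # the rule fired
        return False      # the rule did nothing
    return rule


sprinkler = Sprinkler()
rain_rule = make_rule(lambda event: event.get("condition") == "rain",
                      sprinkler.turn_off)

print(rain_rule({"condition": "sunny"}), sprinkler.on)  # False True
print(rain_rule({"condition": "rain"}), sprinkler.on)   # True False
```

The user supplies only the condition and the action; everything else is generic plumbing, which is what makes this style approachable for non-developers.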

The “Internet of Things” (IoT) is the latest trend: common devices that are internet-connected, and able to be remotely operated and monitored. Cameras, alarm systems, locks, faucets, light bulbs, switches, thermostats, sprinklers, and barbecue thermometers are some simple examples of common devices that can be linked into cloud-based applications or controlled remotely from a smart phone.

Cloud-based computing allows people to leverage massive computing resources to do practical things, with capabilities that are beginning to extend beyond the browser.

Conclusion

Man began with hand tools, using hard or sharp stones to transmit force, and later, the handle was added in order to increase force.

Simple machines, the lever, wheel, pulley, wedge, inclined plane, and screw, took us from ancient Egypt and Greece up to the Renaissance.

Machines built with gears and cams took us from the mid 1400’s to the late 1800’s.

Electronic machines began with the telegraph and radio, and by the mid 1900’s, electronic circuits could displace the function of most mechanical machines.

Digital electronics allowed machines to be miniaturized and mass-produced, and culminated in the microprocessor.

Microprocessor-based microcomputers paved the way for massive software development, while the microcontroller eventually displaced TTL-based circuits.

The microcontroller allowed computers to be connected to each other, leading to the internet, the largest and most complex machine ever created by man – a machine whose functions are ever-expanding and evolving.

Software, initially running on standalone machines, can now be distributed across the internet, an approach called cloud-based computing, paving the way for new types of devices and applications that “think in the cloud”.

Each generation of machines paved the way for increasingly more precise manufacturing capabilities, allowing mass production, and eventually leading to the creation of the next generation of machines, culminating in the capability to create new software and applications, on the fly, in the cloud.

We’ve come a long way from hitting things with rocks to being able to verbally ask a smart phone to set the thermostat.

Why I like this review: the entire history is expounded in a few paragraphs, so it is not boring.